Library Integration for IMU - Video Undistortion


Introduction

The previous step determined the distortion quaternion, which represents the image rotation that causes the video instability. Here, we assume that all the movement can be expressed as rotations and corrected by re-orienting the image on a spherical surface. For proper re-orientation, it is crucial to know how the camera relates real-world coordinates to the image. This section covers the process of undistorting the image using the RidgeRun Video Stabilization Library.

Using the Library to Undistort

The RidgeRun Video Stabilization Library performs the undistortion using an accelerated execution backend. It receives two images (one input and one output), the distortion quaternion from the previous step, and the field-of-view (FOV) scale, where a value greater than 1 means that the resulting image will be zoomed out.

Overall, the technique behind the undistortion is the use of the fisheye lens distortion model, where the camera matrix is given by:

$$K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

where $f_x$ and $f_y$ are the focal distances in the x and y axes, and $c_x$ and $c_y$ are half of the input width and height, respectively. For better stabilisation results, it is highly recommended to calibrate the camera to get the intrinsic camera matrix. The calibration sections below cover this topic in detail.

After that, we need to scale the camera matrix that will be applied in order to scale the image. The new camera matrix is given by:

$$K' = \begin{bmatrix} f'_x & 0 & c'_x \\ 0 & f'_y & c'_y \\ 0 & 0 & 1 \end{bmatrix}$$

where $f'_x$ and $f'_y$ are the $f_x$ and $f_y$ values multiplied by the inverse of the FOV scale, and $c'_x$ and $c'_y$ are the halves of the output width and height. This matrix is computed automatically and no intervention is needed by the user.
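As a rough illustration of the two matrices described above, the following C++ sketch builds a camera matrix with the principal point at the image centre and derives the scaled matrix for a given FOV scale. The `Matrix3` alias and both function names are hypothetical and are not part of the library API; the library computes the new matrix internally.

```cpp
#include <array>
#include <cassert>

// Row-major 3x3 matrix (hypothetical helper type for this sketch).
using Matrix3 = std::array<double, 9>;

// Builds a camera matrix from the focal distances and the input size,
// placing the principal point at half of the width and height.
Matrix3 MakeCameraMatrix(double fx, double fy, int width, int height) {
  assert(width > 0 && height > 0);
  return {fx,  0.0, width / 2.0,
          0.0, fy,  height / 2.0,
          0.0, 0.0, 1.0};
}

// Builds the new camera matrix: the focal distances are multiplied by the
// inverse of the FOV scale and the principal point moves to the centre of
// the output image.
Matrix3 MakeNewCameraMatrix(const Matrix3& k, double fov_scale,
                            int out_width, int out_height) {
  assert(fov_scale > 0.0);
  assert(out_width > 0 && out_height > 0);
  return {k[0] / fov_scale, 0.0,              out_width / 2.0,
          0.0,              k[4] / fov_scale, out_height / 2.0,
          0.0,              0.0,              1.0};
}
```

For example, with a focal distance of 800 pixels and a FOV scale of 2.0, the new focal distance becomes 400 pixels, which widens the visible field and makes the image appear zoomed out.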

Apart from the intrinsic camera matrix and the new camera matrix, it is possible to add the fish-eye distortion parameters (often known as k1, k2, k3 and k4). To get the distortion parameters, camera calibration is compulsory.

The map creation uses those two camera matrices and a rotation matrix extracted from the quaternion, in the same way as initUndistortRectifyMap from OpenCV.
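To make the map creation more concrete, here is a minimal C++ sketch of the per-pixel computation, assuming the fisheye model as formulated in OpenCV (the distorted angle is θ(1 + k1θ² + k2θ⁴ + k3θ⁶ + k4θ⁸)) and matrices without skew. The types `Quat` and `Pixel` and the function names are hypothetical; this is not the library's implementation, only an outline of the idea.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Matrix3 = std::array<double, 9>;  // row-major 3x3 (hypothetical helper)

struct Quat { double w, x, y, z; };     // hypothetical quaternion type
struct Pixel { double u, v; };

// Converts a quaternion (normalised internally) into a rotation matrix.
Matrix3 RotationFromQuaternion(const Quat& q) {
  const double n = std::sqrt(q.w * q.w + q.x * q.x + q.y * q.y + q.z * q.z);
  assert(n > 0.0);
  const double w = q.w / n, x = q.x / n, y = q.y / n, z = q.z / n;
  return {1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y),
          2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x),
          2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)};
}

// For an output pixel (u, v), returns the input pixel to sample from.
// k: camera matrix, new_k: new camera matrix, r: rotation, d: k1..k4.
Pixel MapOutputToInput(double u, double v, const Matrix3& k,
                       const Matrix3& new_k, const Matrix3& r,
                       const std::array<double, 4>& d) {
  // Back-project the output pixel through the new camera matrix.
  const double xn = (u - new_k[2]) / new_k[0];
  const double yn = (v - new_k[5]) / new_k[4];
  // Apply the inverse rotation (transpose of r) to the ray (xn, yn, 1).
  const double x = r[0] * xn + r[3] * yn + r[6];
  const double y = r[1] * xn + r[4] * yn + r[7];
  const double z = r[2] * xn + r[5] * yn + r[8];
  if (z <= 0.0) return {-1.0, -1.0};  // the ray points behind the camera
  const double a = x / z, b = y / z;
  // Fisheye distortion of the incidence angle theta.
  const double rad = std::sqrt(a * a + b * b);
  const double theta = std::atan(rad);
  const double t2 = theta * theta;
  const double theta_d =
      theta * (1 + t2 * (d[0] + t2 * (d[1] + t2 * (d[2] + t2 * d[3]))));
  const double scale = rad > 1e-12 ? theta_d / rad : 1.0;
  // Project into the input image with the original camera matrix.
  return {k[0] * a * scale + k[2], k[4] * b * scale + k[5]};
}
```

Evaluating this function for every output pixel yields the two lookup maps (one per coordinate) that a remap step then uses to sample the input image.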

RidgeRun Video Stabilization Camera Calibration Tool

The library includes a tool to help during the camera calibration process. This tool is called rvs-camera-calibration and it is intended for evaluation purposes. It allows:

- Capturing: captures the frames for calibration. It must be run with the camera connected. It does not require the IMU to work.

- Calibrating: gets the captured frames and generates the matrices. It can be run on another platform or computer.

- Undistort: tests the matrices on the captured frames. It can be run on another platform or computer.

The options are:

---------------------------------------------------------

RidgeRun Video Stabilisation Library

Camera Calibration Tool

---------------------------------------------------------

Usage:

rvs-camera-calibration -m MODE -c v4l2 -d CAMERA_NUMBER -o PATH -i PATH -s NUMBER_SAMPLES [-p] [-q]

Options:

-h: prints this message

-m: application mode:

capture: capture the image samples for calibration.

Requires -o to be defined

calibration: perform the calibration with the samples

Requires -i to be defined with images in the

format ###.jpg. Saves the files into the

file calibration.yml

undistort: test the undistortion parameters.

Requires -i to be defined with images in the

format ###.jpg. Loads the files into the

file calibration.yml

Also requires -o to be defined to save the

undistorted images

-d: camera number. It is the camera or sensor identifier

For example: 0 for /dev/video0

-c: capture source (already demosaiced). Selects the

video source. For example: argus or v4l2. Default:

argus

-t: technique. Selects the algorithm for calibration and

correction. Default: (fisheye). Other: brown

-s: number of samples. Number of images to take during

the capture mode. Default: 50

-o: output path. Existing output folder to save the

samples: For example: ./samples/

-i: input path. Existing input folder to retrieve the

samples: For example: ./samples/

-k: detect chessboard. Perform detection of the pattern

during capture

-p: preview the images, approving by pressing Y and

cancelling with N. It helps to discard bad samples.

Default: disabled

The usage of each mode is covered in the following sections.

Calibrating the Camera

This section covers the theory, foundations and practical tips for calibrating the camera for Video Stabilization. This process is critical for proper stabilization and for improving the quality of the results. This section also covers the integrated calibration tool and its use across the different calibration stages.

Theory

The camera calibration process finds the intrinsic camera matrix, which represents a mapping between the image space (in pixels) and the real-world metric system. In other words, it maps pixels on the image to physical units such as millimetres. This gives an idea of the camera's surroundings and the overall imaging system.

In addition, the calibration process finds the distortion parameters, which describe the distortion due to the curvature of the fisheye lens.

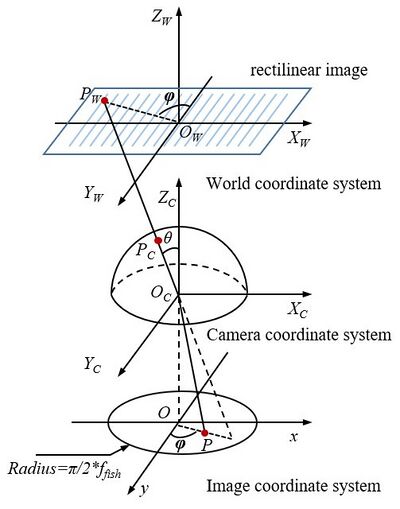

This image illustrates the entire process, where the intrinsic camera matrix helps to go from the Image Coordinate System (at the bottom) to the World Coordinate System (at the mid-top). The distortion parameters help to transition from the Camera Coordinate System (hemisphere) to the rectilinear system (planar image).

The working principle of the stabilization library is based on the rotation of the camera lens with respect to the origin of the image plane.

The RidgeRun Video Stabilization Library is flexible in terms of calibration: a single, relaxed calibration can be reused for any camera as long as it uses the same lens (model and manufacturer) and image sensor. This means that, for mass production, only one camera needs calibration, and the result can be generalised without significant quality loss.

Calibration Pattern

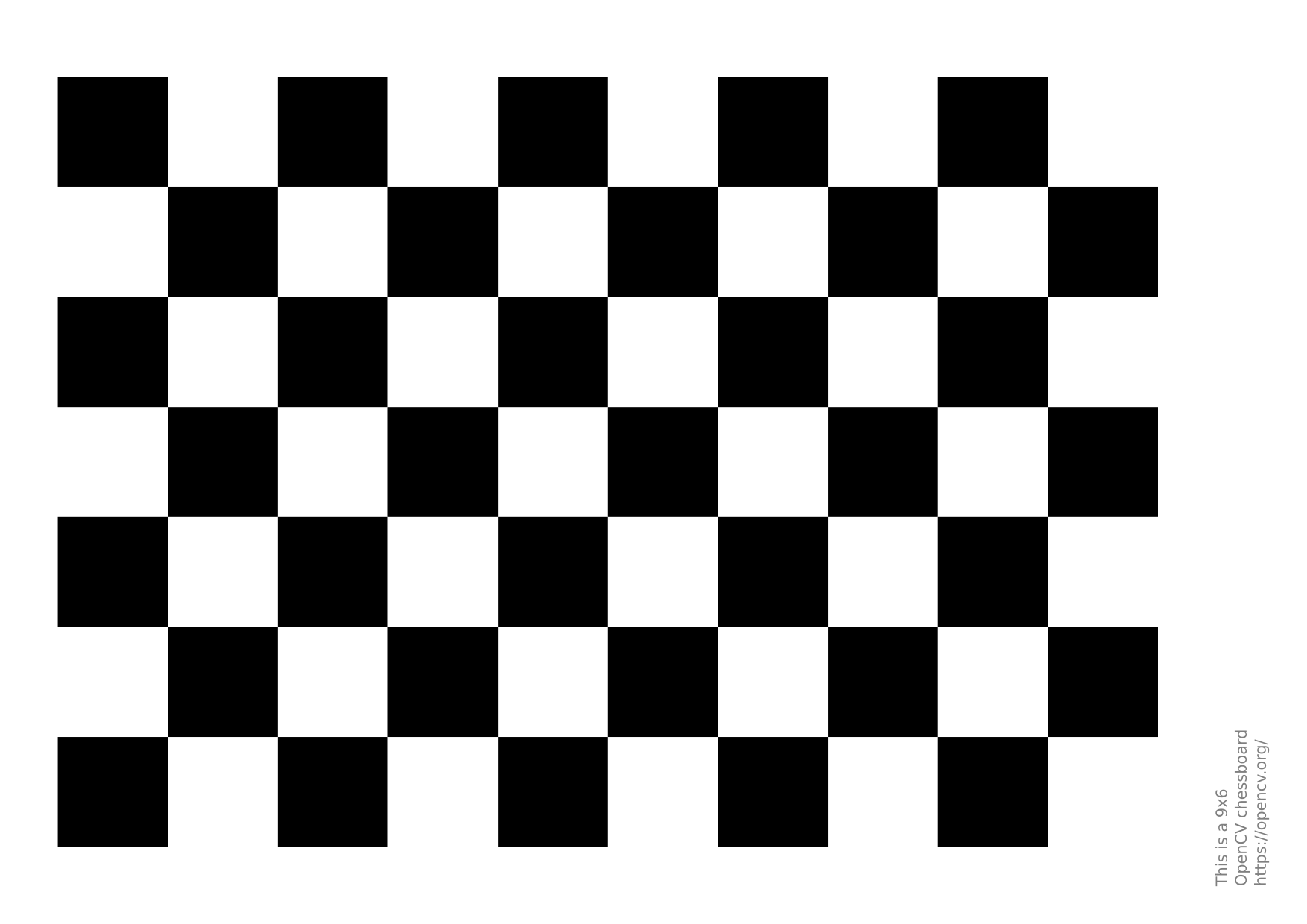

Camera calibration requires a known pattern with known dimensions to properly translate the image coordinate system to the world coordinate system. Moreover, the pattern must be as uniform as possible so that its straight lines stand out, since those lines are used to determine the distortion coefficients.

Our suggestion is to print the chessboard pattern on a solid, rigid surface, as follows:

GitHub Link for chessboard pattern. It is a 9x6 pattern with 25mm squares that can be printed on a letter-size/A4 piece of paper.
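Because the pattern dimensions are known, calibration code typically pairs each detected corner with its physical position on the board. The sketch below generates those reference points for the pattern above. It assumes that 9x6 refers to the grid of corners used for detection, which is the usual convention in OpenCV-based tools but is not confirmed here; `Point3` and `MakeChessboardPoints` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 { double x, y, z; };  // position on the board in millimetres

// Generates the planar reference points (z = 0) of a chessboard with
// cols x rows corners and the given square size, in row-major order.
std::vector<Point3> MakeChessboardPoints(int cols, int rows, double square_mm) {
  assert(cols > 0 && rows > 0 && square_mm > 0.0);
  std::vector<Point3> points;
  points.reserve(static_cast<std::size_t>(cols) * static_cast<std::size_t>(rows));
  for (int r = 0; r < rows; ++r) {
    for (int c = 0; c < cols; ++c) {
      points.push_back({c * square_mm, r * square_mm, 0.0});
    }
  }
  return points;
}
```

Calling `MakeChessboardPoints(9, 6, 25.0)` yields 54 points, the last one at (200, 125, 0) mm. If the print is scaled to fit the page, the square size must be measured again and passed in accordingly.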

Sampling

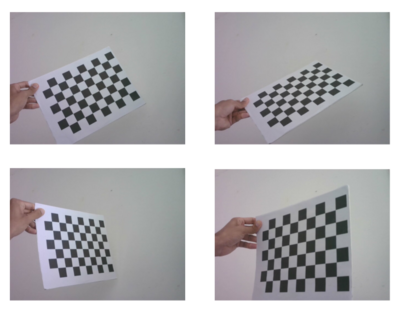

After printing the calibration pattern (chessboard), it is time to take some pictures. They must comply with the following criteria:

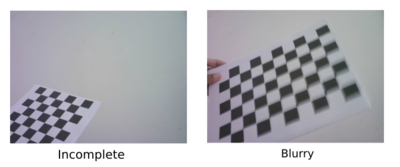

- No blur: the idea is to have a clear image without movement.

- Always in the scene: the chessboard must always be completely visible in the scene.

- Final image dimensions: use the image dimensions used in production during calibration. If the production environment uses 1080p images, the calibration should also use 1080p for better results.

- Good amount of images: take at least 50 samples for image calibration.

During the capture, the chessboard must be placed in different image regions and in different poses, such as rotations about the three axes and translations.

Good Samples:

Bad Calibration Samples:

Using the RidgeRun Video Stabilization Tool

The capture mode of the tool allows for capturing pictures from the camera and storing them in a sequence for later calibration. It uses OpenCV and GStreamer to capture and is compatible with V4L2 and NvArgus cameras.

The use of the capture mode is the following:

# Camera index: equivalent to device /dev/video0 in V4L2 or sensor-id=0 in NvArgus

CAMERA_INDEX=0

# Resolution: must match the final stabilization resolution

WIDTH=1280

HEIGHT=720

# Output folder: where the images are stored. Must exist before running the tool

OUTPUT=./samples

# Capture device: Options are v4l2 or argus

DEV=v4l2

# Tool

rvs-camera-calibration -m capture -o $OUTPUT -d $CAMERA_INDEX -width $WIDTH -height $HEIGHT -c $DEV -k

The options for the capture are:

Options:

-d: camera number. It is the camera or sensor identifier

For example: 0 for /dev/video0

-c: capture source (already demosaiced). Selects the

video source. For example: argus or v4l2. Default:

argus

-s: number of samples. Number of images to take during

the capture mode. Default: 50

-o: output path. Existing output folder to save the

samples: For example: ./samples/

-k: detect chessboard. Perform detection of the pattern

during capture. Helps with verification.

-p: preview the images, approving by pressing Y and

cancelling with N. It helps to discard bad samples.

Default: disabled.

While the tool is running, it prompts on the command line whether to keep or discard each captured frame, based on the preview. This lets the user keep the better-quality pictures and discard bad samples. An example of the instructions is the following:

[INFO]: Capturing new frames. Please, take into account

Press Enter to capture.

Type 'n' and press Enter to discard and capture a new one.

Type 'q' and press Enter to exit.

[INFO]: Recommendation: perform captures every two seconds or more

[INFO]: New frame captured: 0

n <---------- an 'n' was typed to discard the frame 0

[INFO]: New frame captured: 1

<---------- an 'Enter' was typed to save the frame 1

[INFO]: Saving into: ./samples/1.jpg

[INFO]: New frame captured: 2

<---------- an 'Enter' was typed to save the frame 2

[INFO]: Saving into: ./samples/2.jpg

[INFO]: New frame captured: 3

q <---------- a 'q' was typed to exit

[WARN]: Exiting early...

[INFO]: Terminating...

Calibration Computation

In the calibration stage, the calibration algorithm identifies the chessboard joining points, that is, the corners where the squares meet. The algorithm first passes over all the images, which makes it possible to check that they are suitable for calibration. An image is adequate if all the joining points are well identified and well connected. The columns of the chessboard must be connected through vertical lines, as illustrated by the following picture:

After verifying and classifying all images, the algorithm will take only the images classified as adequate and proceed to the computation of the camera matrix and the distortion parameters. The camera matrix is a 3x3 matrix, and the distortion parameters are a 1x4 matrix.
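The calibration log shown later reports an RMS value. In OpenCV-based calibration this is normally the root-mean-square reprojection error, in pixels, between the detected corners and the corners reprojected with the estimated parameters; the following sketch assumes that meaning. `Point2` and `RmsReprojectionError` are hypothetical names, not part of the tool.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 { double x, y; };  // pixel coordinates

// Root-mean-square distance between detected corners and the same corners
// reprojected through the estimated camera matrix and distortion parameters.
double RmsReprojectionError(const std::vector<Point2>& detected,
                            const std::vector<Point2>& reprojected) {
  assert(!detected.empty());
  assert(detected.size() == reprojected.size());
  double sum_sq = 0.0;
  for (std::size_t i = 0; i < detected.size(); ++i) {
    const double dx = detected[i].x - reprojected[i].x;
    const double dy = detected[i].y - reprojected[i].y;
    sum_sq += dx * dx + dy * dy;
  }
  return std::sqrt(sum_sq / static_cast<double>(detected.size()));
}
```

A lower value generally indicates that the model fits the samples better, although a low RMS alone does not guarantee a good calibration, which is why the matrices are also tested visually afterwards.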

Using the RidgeRun Video Stabilization Tool

The calibration mode of the tool calibrates the camera from pictures already taken with it. During the process, the user can select the samples with the chessboard clearly detected and discard the dubious ones. It uses the OpenCV library to calibrate the Fisheye and Brown-Conrady lens models.

The use of the calibration mode is the following:

# Input folder: where the images were stored from the previous step. Must exist before running the tool

INPUT=./samples

# Model: distortion model. Options: brown, fisheye

MODEL=fisheye

# Tool

rvs-camera-calibration -m calibration -i $INPUT -t $MODEL

The options for calibration are:

Options:

-t: technique. Selects the algorithm for calibration and

correction. Default: (fisheye). Other: brown

-i: input path. Existing input folder to retrieve the

samples: For example: ./samples/

-k: detect chessboard. Perform detection of the pattern

during capture

-p: preview the images, approving by pressing Y and

cancelling with N. It helps to discard bad samples.

Default: disabled.

While the tool is running, it prompts on the command line whether to accept or discard each frame, based on the preview. This lets the user keep the better-quality pictures and discard bad samples. An example of the instructions is the following:

---------------------------------------------------------

RidgeRun Video Stabilisation Library

Camera Calibration Tool

---------------------------------------------------------

[INFO]: Launching calibration tool

[INFO]: Selecting frames and calibrating. Please, take into account

Press Enter to accept the frame and continue.

Type 'n' and press Enter to discard.

Type 'q' and press Enter to exit.

[INFO]: Calibrating with: brown-comrady

[INFO]: Reading file: ./samples/1.jpg

[WARN]: Cannot find the chessboard within the sample. Discarding... <--- When using -k

[WARN]: Chessboard not found. This image will be discarded

[INFO]: Reading file: ./samples/10.jpg

<--- When accepting the frame

[INFO]: Image taken for calibration

[INFO]: Reading file: ./samples/11.jpg

q <--- When exiting

[WARN]: Exiting early...

[INFO]: RMS: 0.355463

[INFO]: Camera matrix:

{811.16, 0, 240.504, 0, 809.157, 216.635, 0, 0, 1}

[INFO]: Distortion:

{-0.236816, 8.93896, -0.00620267, -0.0323884}

[INFO]: Terminating...

At the end of the calibration, it will print the matrices in a C-friendly format. Moreover, it will generate a calibration.yml file compatible with cv::FileStorage. These matrices should be used for GStreamer or a custom application to get better-quality results. In the case of GStreamer, set the properties:

- undistort-intrinsic-matrix:

undistort-intrinsic-matrix="<811.16, 0, 240.504, 0, 809.157, 216.635, 0, 0, 1>"

- undistort-distortion-coefficients:

undistort-distortion-coefficients="<0, 0, 0, 0>"
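When the matrices come from a custom application rather than from the tool's printout, a small helper can produce the property values. The sketch below mirrors the `<a, b, c>` layout of the examples above; the helper name `ToPropertyString` is hypothetical, and the element order (row by row) is an assumption based on the printed camera matrix.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Formats a flat list of values as "<v0, v1, ..., vn>".
std::string ToPropertyString(const std::vector<double>& values) {
  assert(!values.empty());
  std::ostringstream out;
  out.precision(10);  // keep more digits than the default of 6
  out << "<";
  for (std::size_t i = 0; i < values.size(); ++i) {
    if (i > 0) out << ", ";
    out << values[i];
  }
  out << ">";
  return out.str();
}
```

For instance, passing four zeros returns `<0, 0, 0, 0>`, matching the distortion coefficients example above.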

Testing the Matrices

In the final stage of the calibration, the matrices are tested on the samples to identify possible issues during the calibration process. Some details to pay attention to are:

- The lines of the chessboard and other objects must be straight.

- The borders must change at least slightly.

In fisheye lenses, the roundness near the centre must disappear, highlighting lines and changing the borders.

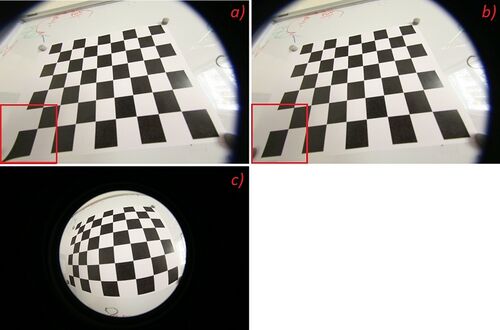

In the image presented above, there are some important details to take into account:

- A) and B) are undistorted images using the computed values.

- C) is the original image captured with a fisheye lens.

Please notice that A) has an issue in one of the chessboard squares. This is a sign of a bad calibration. B) illustrates a case where the calibration is successful, highlighted by the straight lines of the chessboard.

Using the RidgeRun Video Stabilization Tool

The undistort mode of the tool takes pictures already captured with the camera, undistorts them, and stores the results in a sequence. It can undistort using the Fisheye and Brown-Conrady lens models.

The use of the undistort mode is the following:

# Input folder: where the distorted images are stored. Must exist before running the tool

INPUT=./samples

# Output folder: where the images are stored. Must exist before running the tool

OUTPUT=./samples-undistorted

# Model

MODEL=fisheye

# Tool

rvs-camera-calibration -m undistort -i $INPUT -o $OUTPUT -t $MODEL -p

The options are:

Options:

-t: technique. Selects the algorithm for calibration and

correction. Default: (fisheye). Other: brown

-o: output path. Existing output folder to save the

samples: For example: ./samples/

-i: input path. Existing input folder to retrieve the

samples: For example: ./samples/

-p: preview the images, approving by pressing Y and

cancelling with N. It helps to discard bad samples.

Default: disabled.

An example of usage:

---------------------------------------------------------

RidgeRun Video Stabilisation Library

Camera Calibration Tool

---------------------------------------------------------

[INFO]: Launching undistortion tool

[INFO]: Undistorting with: brown-comrady

[INFO]: Loaded camera matrix: [811.1599197236691, 0, 240.5041687070753;

0, 809.1568154990285, 216.6352609536164;

0, 0, 1]

[INFO]: Loaded distortion coefficients: [-0.2368160042337804, 8.938961895909738, -0.00620267258069656, -0.03238835791507231, -88.68282698126136]

[INFO]: Reading file: ./samples/1.jpg

[INFO]: Saving undistorted file: ./samples-undistorted/1.jpg

[INFO]: Reading file: ./samples/10.jpg

[INFO]: Saving undistorted file: ./samples-undistorted/2.jpg

[INFO]: Reading file: ./samples/11.jpg

The matrices are loaded from calibration.yml, and pressing Enter advances to the next frame.

Create the Runtime Settings

If you want to integrate RVS into your code base, the first step is to create the runtime settings. They let you configure the backend with application-specific details, such as work sizes, queues, platforms, or contexts in OpenCL, and devices and streams in CUDA. If the runtime settings are not provided, the backend creates its own using the defaults: the first available device, default work sizes, and its own working queue.

For the OpenCV (or CPU) execution, there is no need to use the runtime settings. However, for OpenCL, you can adjust any of the following:

- Local Size: Determines the size of the workgroup (default 8x4).

- Device Index: Specifies which device the backend must take from the platform (used in case context and queues are not defined).

- Platform Index: Specifies which platform the backend must take from the system (used in case context and queues are not defined).

- Context: Sets an existing context to use (does not use the device nor the platform index).

- Queue: Sets an existing work queue to use (does not use the device nor the platform index).

The following snippets show two ways to create the runtime settings for the backend:

// Create the settings

auto settings = std::make_shared<OpenCLRuntimeSettings>();

// Set the devices to create a new context within the backend

settings->platform_index = 0;

settings->device_index = 0;

// Ready to use

Or:

// Create the settings

uint platform_index = 0;

uint device_index = 0;

auto settings = std::make_shared<OpenCLRuntimeSettings>();

// Create the platform

std::vector<cl::Platform> platforms;

cl::Platform::get(&platforms);

cl_context_properties properties[] =

{CL_CONTEXT_PLATFORM, (cl_context_properties)(platforms[platform_index])(), 0};

// Create the context

auto context = std::make_shared<cl::Context>(CL_DEVICE_TYPE_GPU, properties);

// context is a shared pointer, so its members are accessed with ->

std::vector<cl::Device> devices = context->getInfo<CL_CONTEXT_DEVICES>();

// Create the queue (err receives the OpenCL status code)

cl_int err = CL_SUCCESS;

auto queue = std::make_shared<cl::CommandQueue>(*context, devices[device_index], 0, &err);

// Set the settings

settings->platform_index = platform_index;

settings->device_index = device_index;

settings->context = context;

settings->queue = queue;
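Regarding the Local Size setting listed above: in OpenCL, the global work size of a kernel is commonly padded up to a multiple of the workgroup size, with the kernel ignoring the extra work items. How the library handles this internally is not stated here, so the following is only a sketch of that common idiom; `WorkSize`, `RoundUpToMultiple` and `PaddedGlobalSize` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

struct WorkSize { std::size_t x, y; };

// Rounds value up to the next multiple of `multiple`.
std::size_t RoundUpToMultiple(std::size_t value, std::size_t multiple) {
  assert(multiple > 0);
  return ((value + multiple - 1) / multiple) * multiple;
}

// Pads the image size so that each dimension is divisible by the local size.
WorkSize PaddedGlobalSize(std::size_t width, std::size_t height,
                          const WorkSize& local) {
  return {RoundUpToMultiple(width, local.x), RoundUpToMultiple(height, local.y)};
}
```

With the default 8x4 local size, a 1920x1080 image needs no padding, since both dimensions are already divisible. Choosing a local size that divides the production resolution avoids idle work items.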

Create the Undistort Instance

The undistort instance must match the runtime settings. At this moment, the available undistort algorithms are:

- kFishEyeOpenCV: uses the OpenCV backend.

- kFishEyeOpenCL: uses the OpenCL backend.

- kFishEyeCUDA: coming soon.

To create a new undistort instance:

// Assumes that settings are a valid OpenCLRuntimeSettings shared pointer

auto undistort_cl =

IUndistort::Build(UndistortAlgorithms::kFishEyeOpenCL, settings);

You can leave settings as a nullptr to have a backend with default settings.

Correcting the Image

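Once the undistort instance is created, set the camera matrices as follows (here, kCamMatrix and kDistCoeffs are assumed to hold the calibration results, and kCalSize the width and height of the calibration images):

undistort_cl->SetCameraMatrices(kCamMatrix, kDistCoeffs, kCalSize[0],

kCalSize[1]);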

After that, you can use the Apply method:

// Assumes that undistort_cl is created as above,

// inimage and outimage are IImage shared pointers

// and rotation is a quaternion proceeding from the

// stabilization algorithm

std::shared_ptr<IImage> inimage, outimage;

Quaternion<double> rotation;

double fov = 1.5;

undistort_cl->Apply(inimage, outimage, rotation, fov);

After invoking the Apply method, the outimage is the resulting image, ready to use.